Last week, I shared some thoughts on the fact that any modern quality process that aims to deliver value in today’s software development should span the whole SDLC.

As a consequence, quality assessment and testing don’t happen only at the end of development; they are embedded in every single step of the process. Keeping track of what has and hasn’t been assessed and validated isn’t always straightforward, especially while migrating to new processes. To help with that, there are several frameworks that can be used to assess and organize testing and quality practices. One that I’ve found effective at a couple of organizations I’ve worked at is the Testing Quadrants, initially described by Brian Marick. While it was developed mainly with testing in mind, its scope can easily be expanded to help assess quality coverage and make sure the right people and resources are available at every step. The framework analyses and categorizes quality activities into four groups (quadrants).

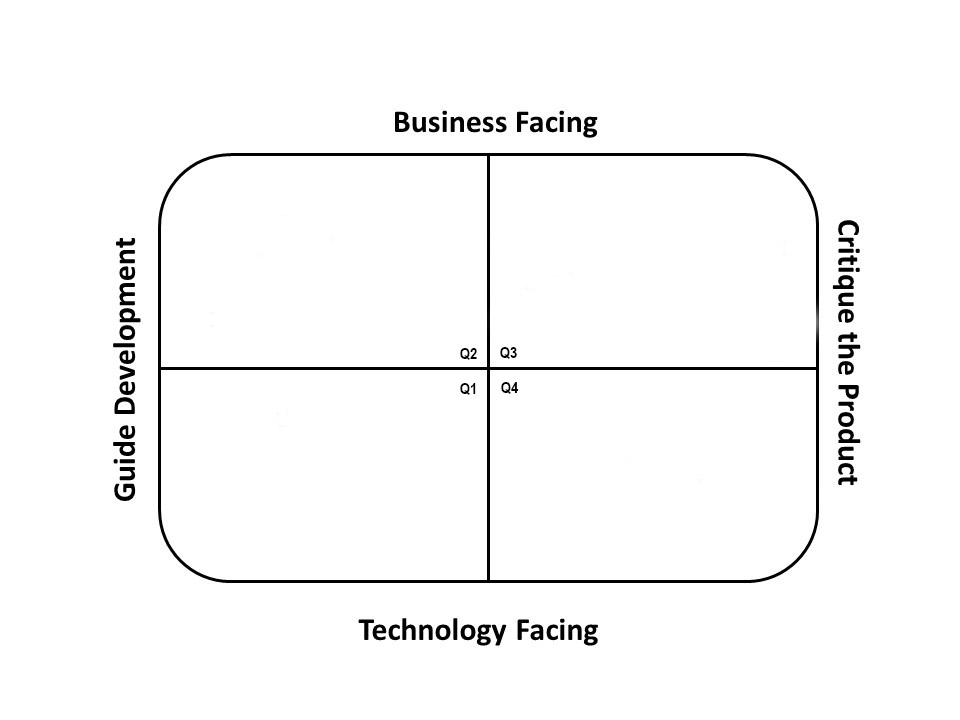

The quadrants on the left side cover the practices that guide development; the ones on the right side cover the practices that critique the developed product. The top half focuses on the question “Are we building the right thing?” (cf. Agile Testing by Janet Gregory and Lisa Crispin), so those quadrants are the more business-oriented ones. The bottom half is more technology-oriented.

Quadrants are usually numbered for ease of reference:

- Q1 covers technology-facing quality activities that guide development.

- Q2 covers business-facing quality activities that guide development.

- Q3 covers business-facing quality activities that critique the product.

- Q4 covers technology-facing quality activities that critique the product.

Note that the numbers don’t imply any order in the activities. For example, in a typical TDD flow, we will likely follow a Q2→Q1→Q4→Q3 sequence. What matters is having at least one activity or test in every quadrant. If you don’t, there’s likely an uncovered area in your process.
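The "something in every quadrant" check can be sketched in a few lines of Python. This is only an illustration: the `Quadrant` enum, the `uncovered_quadrants` helper, and the activity inventory are all made up for the example and are not part of the framework itself.

```python
from enum import Enum


class Quadrant(Enum):
    Q1 = "technology-facing, guides development"
    Q2 = "business-facing, guides development"
    Q3 = "business-facing, critiques the product"
    Q4 = "technology-facing, critiques the product"


def uncovered_quadrants(activities):
    """Return the quadrants that have no activity mapped to them."""
    covered = set(activities.values())
    return [q for q in Quadrant if q not in covered]


# Hypothetical inventory of quality activities for one feature.
activities = {
    "kick-off meeting": Quadrant.Q2,
    "unit tests": Quadrant.Q1,
    "exploratory testing": Quadrant.Q3,
}

for quadrant in uncovered_quadrants(activities):
    print(f"No activity in {quadrant.name}: {quadrant.value}")
```

With this inventory, Q4 is reported as uncovered, which is the kind of gap the quadrants are meant to surface.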

As we said, Q2 focuses on business-facing activities that guide development. These are an important foundation for almost every team, as this is the time to make sure the stakeholders’ requests and the development activities are aligned. Some teams use Behaviour-Driven Development (BDD), Acceptance Test-Driven Development (ATDD), or Specification by Example (SBE) as tools to turn specifications into executable tests.
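To give a feel for what "turning specifications into executable tests" can look like, here is a minimal sketch in plain Python, without any BDD tooling. The shipping rule, the threshold, and every name in it are hypothetical; the point is only that the examples agreed with stakeholders become data the code is checked against.

```python
from decimal import Decimal

# Hypothetical rule agreed at kick-off: orders of 50.00 or more ship free,
# everything else pays a flat 4.99.
FREE_SHIPPING_THRESHOLD = Decimal("50.00")
FLAT_RATE = Decimal("4.99")


def shipping_cost(order_total):
    """Return the shipping cost for an order total."""
    if order_total >= FREE_SHIPPING_THRESHOLD:
        return Decimal("0.00")
    return FLAT_RATE


# The specification, written as concrete examples: (order total, expected cost).
EXAMPLES = [
    ("49.99", "4.99"),
    ("50.00", "0.00"),
    ("120.00", "0.00"),
]


def failing_examples():
    """Run every example and return the ones the implementation gets wrong."""
    failures = []
    for order_total, expected in EXAMPLES:
        actual = shipping_cost(Decimal(order_total))
        if actual != Decimal(expected):
            failures.append((order_total, expected, str(actual)))
    return failures
```

An empty list from `failing_examples()` means the implementation agrees with every example in the specification. Dedicated BDD tools add a more readable, business-friendly layer on top of the same idea.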

At the company I work at, we have kick-off meetings, where product owners, user experience experts, business stakeholders, marketing people, and engineers meet to sync on the project, examine proofs of concept, answer outstanding questions, evaluate potential blockers, and run a pre-mortem on possible issues.

Q1 focuses on technology-facing activities that guide development. This means developing “helpers” to ensure that the code meets the technical requirements. Q1 is one of the quadrants where most, if not all, activities can be automated. For example, teams that practice TDD will write unit tests for small pieces of functionality and then the code to make them pass. These tests should be designed to run quickly, as they are meant to give teams fast feedback and the confidence to change the code fearlessly in the future. UI and integration tests also fall, for the most part, into this area.
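As a rough illustration of the TDD flow described above, here is a small Python sketch. The `normalize_username` function and its rules are invented for the example; in a real TDD cycle the two tests would be written first, fail, and then drive the implementation shown at the top.

```python
def normalize_username(raw):
    """Trim surrounding whitespace and lowercase the name."""
    cleaned = raw.strip().lower()
    if not cleaned:
        raise ValueError("username must not be empty")
    return cleaned


def test_trims_and_lowercases():
    assert normalize_username("  Alice ") == "alice"


def test_rejects_blank_input():
    try:
        normalize_username("   ")
    except ValueError:
        return  # expected: blank input is rejected
    raise AssertionError("expected ValueError for blank input")
```

The tests are plain functions with `assert` statements, so a runner such as pytest can collect them, and they touch no network, disk, or database, which is what keeps the feedback loop fast.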

Q4 focuses on technology-facing activities that evaluate the product. As with Q1, this is usually achieved with automated tests. Feature-level and end-to-end (e2e) tests fall into this category, as they help evaluate the product quality at different levels. Performance tests, security tests, app size monitoring, memory usage tests, and other non-functional tests also fall into Q4. These areas are often covered only by production monitoring, but “shifting left” here has huge potential for improving the perceived quality of your app.
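One possible way to "shift left" on non-functional checks is to compare measurements taken in CI against agreed budgets. The sketch below assumes the measurements are already collected by some other step; the metric names, the limits, and the `budget_violations` helper are all hypothetical.

```python
def budget_violations(measurements, budgets):
    """Return {metric: (measured, limit)} for every metric over its budget.

    Metrics that have a budget but no measurement are skipped here; a
    stricter variant could treat them as violations too.
    """
    return {
        metric: (measurements[metric], limit)
        for metric, limit in budgets.items()
        if metric in measurements and measurements[metric] > limit
    }


# Hypothetical budgets and one build's measurements.
BUDGETS = {"app_size_mb": 40.0, "peak_memory_mb": 300.0, "cold_start_ms": 1500.0}
measurements = {"app_size_mb": 42.3, "peak_memory_mb": 280.0, "cold_start_ms": 1500.0}

violations = budget_violations(measurements, BUDGETS)
for metric, (measured, limit) in violations.items():
    print(f"{metric}: {measured} exceeds budget of {limit}")
```

Here only the app size is flagged, since a value exactly at the limit is treated as within budget. Failing the build on a non-empty result turns a production-monitoring concern into a pre-release check.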

Finally, Q3 focuses on business-facing tests that evaluate the product, which basically means validating that the changes provide the intended value to users in terms of features and quality. Activities in this area tend to be more human-centric and less automatable than the ones in the other quadrants (though some automation may help execute them faster and more reliably). Exploratory testing and usability testing are typical activities that fall into this quadrant. Production monitoring also falls into this group. At work, we have Product Review meetings, where all the stakeholders who kicked off the feature development get back together to evaluate it before shipping.

As you can see, this framework covers the most important areas and is highly customizable, so using it should make it a little easier to assess when a feature is “done” and ready to be released to production.